Does a Continuous Probability Distribution Have to Approach Zero

We consider distributions that have a continuous range of values. Discrete probability distributions were defined by a probability mass function. Analogously, continuous probability distributions are defined by a probability density function.

(This is a section in the notes here.)

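Definition [Probability Density Function] A probability density function (pdf) is a function $f:\mathbb R \to \mathbb R$ that has two properties:

- (Positive) For all $x \in \mathbb R$, $f(x) \geq 0$.

- (Integrates to one) $\int_{-\infty}^\infty f(x)\,dx = 1$.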

From this we can define the following.

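Definition [Continuous Probability Distribution] A random variable $X$ with values in $\mathbb R$ has a continuous probability distribution with pdf $f$ if

$$F(x) := \mathbb P(X \leq x) = \int_{-\infty}^x f(y)\,dy \quad \text{for all } x \in \mathbb R.$$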

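As before, $F(x) = \mathbb P(X \leq x)$ is called the cumulative distribution function (CDF). As before, it is non-decreasing and satisfies

$$\lim_{x\to-\infty} F(x) = 0, \qquad \lim_{x\to\infty} F(x) = 1,$$

and also it satisfies

$$F'(x) = f(x)$$

at every point $x$ where $f$ is continuous.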
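As a numerical sanity check of these definitions, the Python sketch below uses an example density that the notes do not fix: an exponential pdf $f(x) = \lambda e^{-\lambda x}$ for $x \geq 0$, with an assumed rate `LAM = 2.0`. It checks the two pdf properties and confirms that a central difference of the numerically integrated CDF recovers $f$. The helper name `integrate` (a plain trapezoidal rule) is hypothetical and chosen only for illustration.

```python
import math

LAM = 2.0  # assumed rate of an example exponential density (not from the notes)


def f(x: float) -> float:
    """Exponential pdf: LAM * exp(-LAM * x) for x >= 0, and 0 for x < 0."""
    return LAM * math.exp(-LAM * x) if x >= 0 else 0.0


def integrate(g, a: float, b: float, n: int = 20_000) -> float:
    """Composite trapezoidal rule approximating the integral of g over [a, b]."""
    h = (b - a) / n
    total = 0.5 * (g(a) + g(b))
    for i in range(1, n):
        total += g(a + i * h)
    return total * h


def F(x: float) -> float:
    """CDF: integral of f from -infinity to x; f vanishes below 0, so start at 0."""
    return integrate(f, 0.0, x) if x > 0 else 0.0


# Property (Positive): f(x) >= 0 on a grid of test points in [-5, 5].
positive = all(f(k / 10) >= 0 for k in range(-50, 51))

# Property (Integrates to one): the tail beyond 40 is about exp(-80), negligible.
total_mass = integrate(f, 0.0, 40.0)

# Fundamental Theorem of Calculus: a central difference of F recovers f.
x0, h = 0.7, 1e-3
derivative = (F(x0 + h) - F(x0 - h)) / (2 * h)

print(positive, total_mass)
print(derivative, f(x0))
```

The same checks work for any candidate density; only `f` and the integration range need to change.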

A key observation is that when making the conceptual switch from (discrete) probability mass functions to (continuous) probability density functions, we have replaced summations with integrals. This is the main difference, and since most properties of sums also hold for integrals, many properties carry over to continuous random variables.

A few observations. Notice that the equation $F'(x) = f(x)$ is a consequence of the Fundamental Theorem of Calculus. Note that while a pmf must be bounded above by $1$, in principle a pdf can be unbounded. Notice also that we may want to restrict a continuous random variable to a range of values. For example, we may want to assume that our random variable is positive; in this case the pdf will satisfy

$$f(x) = 0 \quad \text{for } x \leq 0.$$

Notice that it does not make sense to think of a continuous random variable as taking any specific value, since the integral over a single point is zero, so $\mathbb P(X = x) = 0$ for every $x$. Instead, we often think of the random variable as belonging to some range of values. For instance, for $a \leq b$, we have

$$\mathbb P(a \leq X \leq b) = \int_a^b f(x)\,dx = F(b) - F(a).$$
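To make two of these observations concrete (a pdf can be unbounded, and only ranges of values carry probability), here is a Python sketch using a hypothetical density that is not from the notes: $f(x) = 1/(2\sqrt{x})$ on $(0, 1]$ and $0$ elsewhere. It integrates to one, its CDF is $F(x) = \sqrt{x}$ on $[0, 1]$, and it grows without bound as $x \to 0$. The helper names `prob_between` and `integrate` are illustrative assumptions.

```python
import math


def f(x: float) -> float:
    """Hypothetical pdf 1 / (2 sqrt(x)) on (0, 1]; it is unbounded near 0."""
    return 1.0 / (2.0 * math.sqrt(x)) if 0.0 < x <= 1.0 else 0.0


def F(x: float) -> float:
    """Closed-form CDF of f: 0 below 0, sqrt(x) on [0, 1], and 1 above 1."""
    return math.sqrt(min(max(x, 0.0), 1.0))


def prob_between(a: float, b: float) -> float:
    """P(a <= X <= b) = F(b) - F(a) for a <= b."""
    return F(b) - F(a)


def integrate(g, a: float, b: float, n: int = 10_000) -> float:
    """Midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


print(f(1e-8))                    # far above 1, unlike any pmf value
print(prob_between(0.0, 0.25))    # sqrt(0.25) - sqrt(0)
print(prob_between(0.3, 0.3))     # a single point carries no probability
print(integrate(f, 0.25, 1.0), prob_between(0.25, 1.0))
```

The last line compares a direct numerical integral of the pdf with the difference of CDF values; the two agree, as the formula for $\mathbb P(a \leq X \leq b)$ says they should.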

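Joint distributions. We can consider the pdf for two (or more) random variables. If $X$ and $Y$ are continuous random variables (defined on the same probability space), then their joint pdf is a function $f:\mathbb R^2 \to \mathbb R$ such that

$$\mathbb P(X \leq x, Y \leq y) = \int_{-\infty}^x \int_{-\infty}^y f(u, v)\,dv\,du,$$

and from this the pdfs of $X$ and $Y$ are recovered by integrating out the other variable:

$$f_X(x) = \int_{-\infty}^\infty f(x, y)\,dy, \qquad f_Y(y) = \int_{-\infty}^\infty f(x, y)\,dx.$$

If $X$ and $Y$ are independent, then the joint pdf is the product of the pdfs:

$$f(x, y) = f_X(x)\, f_Y(y).$$

All of the above extends to more than two random variables in the way you might naturally expect. E.g. the joint pdf of $X_1, \dots, X_n$ is a function of the form $f:\mathbb R^n \to \mathbb R$.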
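A small numerical illustration of joint pdfs, sketched in Python under assumed choices that are not from the notes: $X$ is exponential with rate 1, $Y$ is exponential with rate 3, and they are independent, so their joint pdf is the product $f_X(x) f_Y(y)$. The sketch integrates the joint pdf over a rectangle and compares it with the product of the two CDFs, then integrates out $y$ to recover the pdf of $X$ at one point. All function names are hypothetical.

```python
import math


def f_X(x: float) -> float:
    """Assumed pdf of X: exponential with rate 1."""
    return math.exp(-x) if x >= 0 else 0.0


def f_Y(y: float) -> float:
    """Assumed pdf of Y: exponential with rate 3."""
    return 3.0 * math.exp(-3.0 * y) if y >= 0 else 0.0


def f_joint(x: float, y: float) -> float:
    """Joint pdf of independent X and Y: the product of the two pdfs."""
    return f_X(x) * f_Y(y)


def midpoint_1d(g, a: float, b: float, n: int = 2_000) -> float:
    """Midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


def midpoint_2d(g, a: float, b: float, c: float, d: float, n: int = 200) -> float:
    """Midpoint rule for the double integral of g over [a, b] x [c, d]."""
    hx, hy = (b - a) / n, (d - c) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * hx
        for j in range(n):
            total += g(x, c + (j + 0.5) * hy)
    return total * hx * hy


# P(X <= 1, Y <= 0.5) from the joint pdf, versus F_X(1) * F_Y(0.5).
joint_prob = midpoint_2d(f_joint, 0.0, 1.0, 0.0, 0.5)
product_of_cdfs = (1 - math.exp(-1.0)) * (1 - math.exp(-1.5))

# Integrating the joint pdf over y recovers f_X; the tail beyond 15 is about exp(-45).
marginal_at_half = midpoint_1d(lambda y: f_joint(0.5, y), 0.0, 15.0)

print(joint_prob, product_of_cdfs)
print(marginal_at_half, f_X(0.5))
```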

Expectations

Analogously to the expectation for discrete random variables, we have the following definition.

Definition [Expectation, continuous case] The expectation of a continuous random variable $X$ with pdf $f$ is given by

$$\mathbb E[X] := \int_{-\infty}^\infty x f(x)\,dx\,.$$
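As a quick check of the definition, the following Python sketch computes $\mathbb E[X] = \int x f(x)\,dx$ numerically for an assumed example, an exponential density with rate `LAM = 2.0` (not from the notes), whose exact mean is $1/\lambda = 0.5$. The midpoint-rule helper `integrate` is a hypothetical name.

```python
import math

LAM = 2.0  # assumed rate of an example exponential density


def f(x: float) -> float:
    """Exponential pdf with rate LAM (zero for negative x)."""
    return LAM * math.exp(-LAM * x) if x >= 0 else 0.0


def integrate(g, a: float, b: float, n: int = 20_000) -> float:
    """Midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


# E[X] = integral of x f(x) dx; f is 0 below 0 and negligible beyond 40.
mean = integrate(lambda x: x * f(x), 0.0, 40.0)
print(mean, 1 / LAM)  # numerical value versus the exact answer 1 / LAM
```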

Similarly, the variance is defined much as before:

$$\mathbb V(X) = \mathbb E[(X-\mathbb E[X])^2] = \mathbb E[X^2] - \mathbb E[X]^2 = \int_{-\infty}^\infty x^2 f(x)\,dx - \left( \int_{-\infty}^\infty x f(x)\,dx \right)^2.$$
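Both expressions for the variance can be compared numerically. The Python sketch below assumes a hypothetical uniform density on $[0, 3]$ (not from the notes), for which the exact variance is $(3-0)^2/12 = 0.75$, and evaluates both $\mathbb E[(X-\mathbb E[X])^2]$ and $\mathbb E[X^2] - \mathbb E[X]^2$ with a midpoint rule.

```python
def f(x: float) -> float:
    """Uniform pdf on [0, 3] (a hypothetical example density)."""
    return 1.0 / 3.0 if 0.0 <= x <= 3.0 else 0.0


def integrate(g, a: float, b: float, n: int = 10_000) -> float:
    """Midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


mean = integrate(lambda x: x * f(x), 0.0, 3.0)
second_moment = integrate(lambda x: x * x * f(x), 0.0, 3.0)

var_shortcut = second_moment - mean ** 2                            # E[X^2] - E[X]^2
var_definition = integrate(lambda x: (x - mean) ** 2 * f(x), 0.0, 3.0)  # E[(X - E[X])^2]

print(var_shortcut, var_definition)  # both close to 0.75
```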

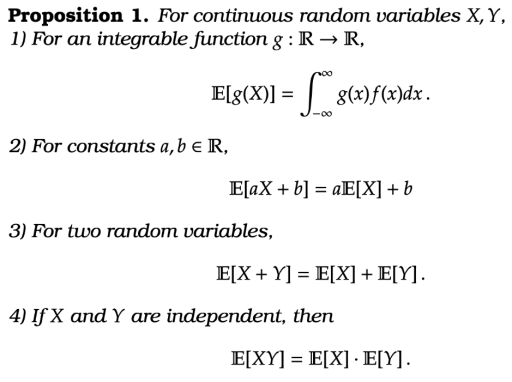

The following proposition is an amalgamation of the lemmas that we had for discrete random variables.

The proof of the above result follows almost identically to the proofs of the earlier discrete results: just replace the summations with integrals. For that reason we omit the proof of this proposition.

The Normal Distribution

The normal distribution arises in many situations involving measurement, e.g. the distribution of heights, the relative change in a stock index, the measurement of physical phenomena (e.g. a comet passing the sun), the result of an election poll, the distribution of heat.

The normal distribution is, perhaps, the most important probability distribution. Why is this? Roughly, because it is the distribution that arises when you add up lots of small independent errors. This is stated more formally as a result called the central limit theorem, which we will discuss shortly.
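The idea of adding up many small independent errors can be illustrated by simulation. This Python sketch is only an illustration, not the central limit theorem itself, and its choices are assumptions: each measurement is the sum of 12 independent errors drawn uniformly from $[-0.5, 0.5]$, so the sum has mean $0$ and variance $12 \cdot \tfrac{1}{12} = 1$. It compares the fraction of sums within $[-1, 1]$ with the standard normal value, computed with the standard library's `statistics.NormalDist`.

```python
import random
import statistics
from statistics import NormalDist

rng = random.Random(1)  # fixed seed so the run is reproducible
N_ERRORS, N_SAMPLES = 12, 50_000  # assumed: 12 small errors per measurement


def total_error() -> float:
    """Sum of N_ERRORS independent Uniform(-0.5, 0.5) errors (each has variance 1/12)."""
    return sum(rng.uniform(-0.5, 0.5) for _ in range(N_ERRORS))


sums = [total_error() for _ in range(N_SAMPLES)]
frac_within_one = sum(abs(s) <= 1.0 for s in sums) / N_SAMPLES
normal_within_one = NormalDist().cdf(1.0) - NormalDist().cdf(-1.0)  # about 0.683

print(statistics.fmean(sums), statistics.pvariance(sums))  # near 0 and 1
print(frac_within_one, normal_within_one)
```

Uniform errors are used only because they are clearly not normal; a histogram of `sums` would already look bell-shaped.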

Definition [Standard Normal Distribution] The standard normal distribution has probability density function

$$\phi(x) = \frac{1}{\sqrt{2\pi}}\, e^{-x^2/2}$$

for $x \in \mathbb R$. If a random variable $Z$ is a standard normal random variable, we write $Z \sim \mathcal N(0, 1)$. The cumulative distribution function is

$$\Phi(z) = \int_{-\infty}^z \frac{1}{\sqrt{2\pi}}\, e^{-x^2/2}\,dx.$$

It can be shown that a standard normal random variable has mean $0$ and variance $1$. By shifting and scaling we can achieve other values for the mean and variance.
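The claims about the standard normal can be checked numerically. In this Python sketch the names `phi`, `Phi` and `integrate` are illustrative; `Phi` uses the standard identity $\Phi(z) = \tfrac12\left(1 + \operatorname{erf}(z/\sqrt{2})\right)$ via `math.erf`. Integrating over $[-10, 10]$ is an assumption that drops a negligible amount of mass.

```python
import math


def phi(x: float) -> float:
    """Standard normal pdf."""
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)


def Phi(z: float) -> float:
    """Standard normal CDF, written via the error function math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2)))


def integrate(g, a: float, b: float, n: int = 20_000) -> float:
    """Midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


total = integrate(phi, -10.0, 10.0)                 # integrates to one
mean = integrate(lambda x: x * phi(x), -10.0, 10.0)  # mean 0
variance = integrate(lambda x: x * x * phi(x), -10.0, 10.0) - mean ** 2  # variance 1
cdf_at_one = integrate(phi, -10.0, 1.0)             # numerical Phi(1)

print(total, mean, variance)
print(cdf_at_one, Phi(1.0))
```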

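Definition [Normal Distribution] The normal distribution with mean $\mu$ and variance $\sigma^2$ has probability density function

$$f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left( -\frac{(x-\mu)^2}{2\sigma^2} \right)$$

for $x \in \mathbb R$. If $X$ is a normally distributed random variable with mean $\mu$ and variance $\sigma^2$, then we write $X \sim \mathcal N(\mu, \sigma^2)$.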
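The pdf formula can be cross-checked against Python's `statistics.NormalDist`, which is parameterised by the standard deviation rather than the variance. In this sketch the example parameters $\mu = 3$, $\sigma^2 = 4$ are assumptions, and `normal_pdf` is a hypothetical helper that follows the formula above, taking the variance as in the notes.

```python
import math
from statistics import NormalDist


def normal_pdf(x: float, mu: float, sigma2: float) -> float:
    """pdf of N(mu, sigma2), parameterised by the variance sigma2 as in the notes."""
    return math.exp(-(x - mu) ** 2 / (2 * sigma2)) / math.sqrt(2 * math.pi * sigma2)


MU, SIGMA2 = 3.0, 4.0  # assumed example parameters (standard deviation 2)

# Cross-check against the standard library, which takes sigma = sqrt(variance).
reference = NormalDist(mu=MU, sigma=math.sqrt(SIGMA2))
test_points = [-1.0, 0.0, 2.5, 3.0, 7.0]
max_gap = max(abs(normal_pdf(x, MU, SIGMA2) - reference.pdf(x)) for x in test_points)

# The density integrates to one (midpoint rule over mu +/- 10 standard deviations).
a, b, n = MU - 20.0, MU + 20.0, 20_000
h = (b - a) / n
total = h * sum(normal_pdf(a + (i + 0.5) * h, MU, SIGMA2) for i in range(n))

print(max_gap, total)
```

Keeping the variance as the argument matches the notation $\mathcal N(\mu, \sigma^2)$; mixing up variance and standard deviation is a common source of bugs when switching to a library.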

A useful point is that if $Z \sim \mathcal N(0, 1)$, then

$$X = \mu + \sigma Z \sim \mathcal N(\mu, \sigma^2).$$

Thus we see that a normal random variable is simply a standard normal random variable that has been rescaled (by $\sigma$) and shifted (by $\mu$).
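The rescaling can be seen in two ways in Python, sketched here with assumed example values $\mu = 3$, $\sigma = 2$. First, the CDF identity $\mathbb P(X \leq x) = \Phi\left((x-\mu)/\sigma\right)$ is compared using `statistics.NormalDist`; second, standard normal draws from `random.Random.gauss` are rescaled and shifted, and their sample mean and standard deviation are checked.

```python
import random
import statistics
from statistics import NormalDist

MU, SIGMA = 3.0, 2.0  # assumed example mean and standard deviation

# CDF identity: P(X <= x) = Phi((x - MU) / SIGMA) when X = MU + SIGMA * Z.
std = NormalDist()  # N(0, 1)
target = NormalDist(mu=MU, sigma=SIGMA)
test_points = [-2.0, 0.0, 3.0, 4.5, 9.0]
cdf_gap = max(abs(target.cdf(x) - std.cdf((x - MU) / SIGMA)) for x in test_points)

# Simulation: rescale (by SIGMA) and shift (by MU) standard normal draws.
rng = random.Random(0)  # fixed seed so the run is reproducible
samples = [MU + SIGMA * rng.gauss(0.0, 1.0) for _ in range(50_000)]

print(cdf_gap)
print(statistics.fmean(samples), statistics.pstdev(samples))  # near MU and SIGMA
```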

Source: https://appliedprobability.blog/2021/11/18/continuous-probability-distributions-2/